Artificial intelligence now reads lips too: a resource for the hearing impaired?

Training a lip reader with a little help from speech recognition systems makes it easier to interpret ambiguous lip movements.

A group of Alibaba researchers, in collaboration with Zhejiang University and the Stevens Institute of Technology, has developed a method for reading lips in video footage using artificial intelligence and machine learning, significantly improving on the performance of methods previously developed by other researchers. The method is called LIBS, short for Lip by Speech.

Lip reading with AI and machine learning is nothing new: as early as 2016, a group of researchers from Google and the University of Oxford presented a system capable of annotating video footage with an accuracy of 46.8%.

That may seem low, but it is far better than the 12.4% accuracy achieved by a professional human lip reader. Even state-of-the-art systems struggle with small, ambiguous lip movements, which keeps them from reaching the much higher accuracy typical of speech recognition systems.

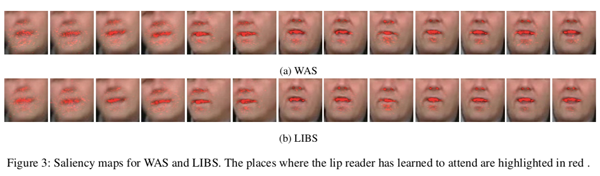

The researchers built LIBS around information obtained from speech recognition systems during training, which provides complementary clues that help interpret lip movements that cannot be uniquely attributed to a given pronunciation.
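The idea of a speech recognizer guiding a lip reader during training can be illustrated with a generic distillation-style loss. The sketch below is not the LIBS objective: the function names, the mean-squared-error guidance term, and the `weight` value are all illustrative assumptions.

```python
import math

def cross_entropy(probs, target_index):
    """Negative log-likelihood of the correct class under the student's prediction."""
    return -math.log(probs[target_index])

def mean_squared_error(a, b):
    """Average squared difference between two equally long feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def guided_loss(student_probs, target_index, student_features, teacher_features, weight=0.5):
    """Task loss plus a weighted term pulling student features toward the teacher's.

    student_* come from the (hypothetical) lip reader, teacher_features from the
    (hypothetical) speech recognizer; `weight` is an assumed hyperparameter.
    """
    task = cross_entropy(student_probs, target_index)
    guidance = mean_squared_error(student_features, teacher_features)
    return task + weight * guidance
```

When the student's features already match the teacher's, the guidance term vanishes and only the ordinary task loss remains; the further apart they are, the larger the penalty.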

This is easier said than done: the researchers had to process the training video so that video and audio were precisely aligned (the tracks often have mismatched lengths, due to different sampling rates and/or empty frames at the beginning or end of a clip), and they had to develop a filtering technique to refine the output of the speech recognition system.
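To make the length-mismatch problem concrete, here is a minimal sketch of one naive way to bring two tracks to the same length: trim empty frames at both ends, then linearly resample one sequence to the other's frame count. This is an assumption for illustration only, not the alignment technique used by the researchers; `trim_empty` and `resample` are hypothetical helpers, and each frame is reduced to a single number for simplicity.

```python
def trim_empty(frames, is_empty):
    """Drop empty frames from the start and end of a sequence, keeping the middle intact."""
    start, end = 0, len(frames)
    while start < end and is_empty(frames[start]):
        start += 1
    while end > start and is_empty(frames[end - 1]):
        end -= 1
    return frames[start:end]

def resample(values, target_len):
    """Linearly interpolate a sequence of numbers to target_len samples."""
    if target_len <= 0 or not values:
        return []
    if len(values) == 1 or target_len == 1:
        return [float(values[0])] * target_len
    # Distance, in source samples, between consecutive output samples.
    step = (len(values) - 1) / (target_len - 1)
    out = []
    for i in range(target_len):
        pos = i * step
        lo = min(int(pos), len(values) - 2)  # left neighbour, clamped at the end
        frac = pos - lo
        out.append(values[lo] * (1 - frac) + values[lo + 1] * frac)
    return out

# Toy usage: audio features sampled more densely than video frames.
audio = trim_empty([0, 0, 2, 4, 6, 8, 10, 0], lambda x: x == 0)
video_frame_count = 3
aligned_audio = resample(audio, video_frame_count)
```

After this step both tracks have one value per video frame, which is the precondition for comparing them frame by frame.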

In this way lip reading is "guided" by the speech recognition system, aided by sensitivity to context at both the frame level and the video sequence level.

This work yielded improvements of 7.66% and 2.75% in error rate over previous methods on the CMLR dataset (more than 100 thousand sentences from content broadcast by China Network Television) and the LRS2 dataset (over 45 thousand sentences taken from BBC video material), respectively.
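The article does not say which error metric is meant. Assuming it is a character error rate, a common measure for lip reading and speech recognition, it could be computed as the edit distance between the predicted and reference transcripts divided by the reference length. The sketch below is a generic implementation with illustrative function names, not code from the LIBS project.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance: minimum insertions, deletions and substitutions."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]

def character_error_rate(ref, hyp):
    """Edit distance normalised by the number of reference characters."""
    if not ref:
        raise ValueError("reference must not be empty")
    return edit_distance(ref, hyp) / len(ref)
```

Under this definition, lower is better, so an improvement of a few percentage points means fewer wrong, missing, or extra characters per reference character.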

Technologies based on systems such as LIBS could be an important aid for people with hearing loss who struggle to follow videos without subtitles. To date, around 466 million people worldwide, about 5% of the global population, are affected by some form of hearing impairment. The situation is set to worsen: according to the WHO, the number is expected to grow to over 900 million by 2050.